Lawrence Livermore's El Capitan just ran the world's largest fluid dynamics simulation - 500 quadrillion degrees of freedom in hours.

Yet many aerospace teams still wait weeks for a single design iteration.

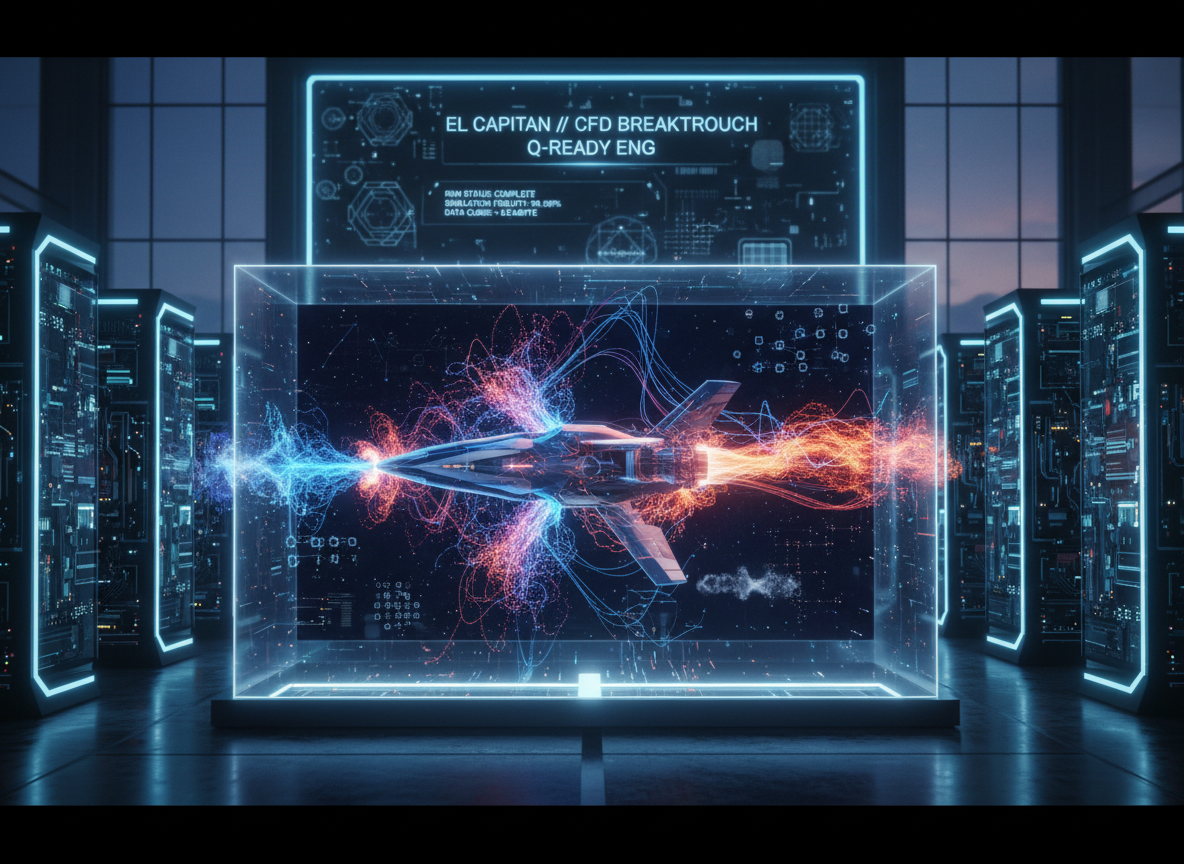

The Simulation That Redefined What's Possible in CFD

A Quick Snapshot of the El Capitan Breakthrough

This isn't incremental progress. For rocket plume CFD, crossing the quadrillion-variable threshold means capturing shock interactions and turbulent mixing that previous methods had to approximate away.

What Exactly Did Researchers Simulate?

- Rocket-rocket plume interactions: Clustered nozzles firing simultaneously, similar to SpaceX Super Heavy configurations, with highly compressible exhaust flows interacting mid-flight.

- Unprecedented resolution: Hundreds of trillions of grid points tracking pressure waves, shock structures, temperature gradients, and turbulence across the entire exhaust field.

- Full-system deployment: All 11,136 compute nodes on El Capitan - more than 44,500 AMD Instinct MI300A processors running in parallel.

- Efficiency gains validated: 80× faster time-to-solution, 25× lower memory footprint, and roughly 5× better energy efficiency compared to prior best-in-class approaches for equivalent physics.

These numbers tie directly to real aerospace design questions: how close can you cluster engines without destructive acoustic coupling? Where do exhaust plumes interfere with vehicle structures or nearby nozzles?

Why This Isn't Just Another "Big Number" Record

Spencer Bryngelson at Georgia Tech and collaborators at NYU and LLNL emphasize that this run validates new mathematical methods and new hardware at the same time. The Information Geometric Regularization (IGR) technique they developed stabilizes violent shock interactions without smearing away the sharp gradients engineers need to see.

This shows that exascale CFD can be faster and more energy efficient, not just bigger. It's a scientifically meaningful milestone, not a benchmark stunt designed to chase rankings.

Why Exascale Alone Won't Fix Everyday CFD

Hero Runs vs Daily Workloads

El Capitan's Gordon Bell-class demonstration is impressive, but it's a single snapshot.

Real aerospace programs demand dozens or hundreds of design iterations - varying injector geometry, throttle profiles, nozzle contours - before freezing a configuration.

Where teams still hurt today:

- Long queues: Week-long or month-long waits on shared national-lab or cloud HPC clusters, especially for full-fidelity meshes.

- High cost of exploring edge cases: Running every design candidate at El Capitan resolution isn't practical given budget and scheduling constraints.

- Limited ability to probe off-nominal scenarios: Rare failure modes, transient startup sequences, or multi-physics coupling often get deferred because the compute budget runs out.

Linear Solvers – The Silent Bottleneck

In implicit and pressure-based CFD solvers, much (often most) of the wall-clock time goes into solving the huge sparse linear systems that arise from discretizing the Navier-Stokes or Euler equations at each time step.

Why linear solvers matter more than core count:

Improving solver convergence by 2-3× can cut total runtime more than doubling the processor count would. Fewer iterations per time step means less arithmetic and fewer global reductions, and because the communication cost of those reductions grows with node count, adding processors yields diminishing returns beyond a certain scale.
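To make the convergence argument concrete, here is a minimal sketch, assuming a model 2D pressure-Poisson problem on a 16 × 16 grid stored densely for brevity. The same system is solved with Jacobi iteration and with unpreconditioned conjugate gradient (CG), and the script reports how many iterations each needs to reduce the residual by a factor of 10^6. The grid size, tolerance, and helper names are illustrative choices, not taken from any particular CFD code; production solvers use sparse storage and preconditioned Krylov or multigrid methods.

```python
import numpy as np

def poisson_2d(n):
    """Dense 5-point Laplacian (Dirichlet boundaries) on an n x n interior grid, scaled by h^2."""
    second_diff = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)  # 1D stencil [-1, 2, -1]
    eye = np.eye(n)
    return np.kron(eye, second_diff) + np.kron(second_diff, eye)

def jacobi(A, b, tol=1e-6, max_iter=100_000):
    """Jacobi iteration x <- x + D^{-1}(b - A x); returns (x, iterations)."""
    d = np.diag(A)
    x = np.zeros_like(b)
    b_norm = np.linalg.norm(b)
    for k in range(1, max_iter + 1):
        x = x + (b - A @ x) / d
        if np.linalg.norm(b - A @ x) <= tol * b_norm:
            return x, k
    return x, max_iter

def conjugate_gradient(A, b, tol=1e-6, max_iter=10_000):
    """Unpreconditioned conjugate gradient for symmetric positive definite A."""
    x = np.zeros_like(b)
    r = b.copy()              # residual b - A x for the initial guess x = 0
    p = r.copy()
    rs = r @ r
    b_norm = np.linalg.norm(b)
    for k in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        if np.sqrt(rs_new) <= tol * b_norm:
            return x, k
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x, max_iter

n = 16                          # 16 x 16 grid -> 256 unknowns
A = poisson_2d(n)
b = np.ones(n * n)
x_j, it_j = jacobi(A, b)
x_cg, it_cg = conjugate_gradient(A, b)
print(f"Jacobi: {it_j} iterations, conjugate gradient: {it_cg} iterations")
```

Both methods cost roughly one matrix-vector product per iteration, so the iteration count is a fair proxy for work here. In a distributed run, each CG iteration also needs two global dot products, which is exactly the kind of communication that stops scaling as node counts grow.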

Even on exascale hardware, inefficient linear algebra can leave GPUs idle while they wait on memory and interconnect traffic, and ill-conditioned matrices drive iteration counts up. This is the gap quantum-ready algorithms aim to close.

From Exascale to Quantum‑Ready CFD

Quantum-ready doesn't mean "waiting for fault-tolerant quantum computers."

It means designing algorithms today that perform well on classical hardware but map naturally to future quantum and hybrid quantum-classical workflows.

Characteristics of quantum-ready CFD:

- Matrix formulations amenable to block encodings: Representing sparse CFD matrices through quantum oracles, so that the number of qubits needed grows only logarithmically with problem size.

- Variational solvers compatible with near-term devices: Techniques like the Variational Quantum Linear Solver (VQLS), which are designed to tolerate noise and run on current or near-future gate-based quantum processors.

- Data paths aligned with GPU-accelerated HPC: Hybrid workflows where classical preprocessing, quantum-inspired optimization, and quantum subroutines share memory and computation seamlessly, avoiding costly data marshalling.
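The logarithmic scaling behind block-encoded and amplitude-encoded formulations is simple arithmetic: a vector of N unknowns fits in the amplitudes of ⌈log₂ N⌉ qubits. The sketch below (the helper name is hypothetical) counts only the system register. Ancilla qubits, state preparation, and readout add cost on top, and a logarithmic qubit count does not by itself imply a logarithmic runtime.

```python
import math

def system_register_qubits(n_unknowns: int) -> int:
    """Qubits needed to amplitude-encode a vector with n_unknowns entries.

    Counts only the system register; ancillas for block encodings or
    VQLS cost-evaluation circuits are extra and depend on the method.
    """
    if n_unknowns < 1:
        raise ValueError("need at least one unknown")
    return max(1, math.ceil(math.log2(n_unknowns)))

for label, n in [("64^2 cavity grid", 64**2), ("1024^3 grid", 1024**3), ("1e15 unknowns", 10**15)]:
    print(f"{label}: {n:.3e} unknowns -> {system_register_qubits(n)} qubits")
```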

Where BQP Fits In

BQP is a quantum-powered simulation company focused on making complex engineering workflows faster and more scalable.

BQP integrates quantum-inspired optimization and hybrid quantum-classical solvers into digital twin and CFD pipelines without requiring teams to overhaul existing HPC infrastructure.

Inside the BQP–NVIDIA–Classiq Collaboration

BQP recently partnered with NVIDIA and Classiq to demonstrate hybrid quantum-classical CFD and digital twin workloads running on CUDA-Q infrastructure, targeting the same class of linear systems that bottleneck traditional solvers.

How the workflow operates:

- Formulate CFD linear systems within BQPhy's pipeline - extracting sparse matrices from discretized PDEs, pressure Poisson equations, or implicit time-stepping schemes.

- Use Classiq to synthesize optimized quantum circuits - automatically generating VQLS ansätze or block-encoded oracle circuits tailored to the matrix structure and available qubit resources.

- Execute VQLS-based solvers on NVIDIA CUDA-Q - leveraging GPU acceleration for classical parameter optimization and quantum circuit simulation, or routing to real quantum hardware when beneficial.

- Feed results back into the digital twin or CFD workflow - updating velocity fields, pressure distributions, or design parameters for the next iteration without leaving the BQPhy environment.
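The first workflow step can be sketched under stated assumptions: one backward-Euler step of the 1D viscous Burgers' equation with a lagged (Picard) convective velocity, central differences, and zero Dirichlet boundaries, which yields a sparse, nonsymmetric tridiagonal system. A second helper then pads the system to a power-of-two size, scales the matrix to unit spectral norm, and normalizes the right-hand side, the usual preconditions for amplitude-encoded quantum linear solvers such as VQLS. Both function names and the test case are hypothetical; the source does not describe how BQPhy performs this step.

```python
import numpy as np

def burgers_implicit_system(u, dx, dt, nu):
    """Hypothetical helper: linear system for one backward-Euler step of 1D viscous Burgers'.

    Picard linearization (convective velocity lagged at the old time level):
        (u_new - u)/dt + u * d(u_new)/dx = nu * d2(u_new)/dx2
    Central differences, homogeneous Dirichlet boundaries, interior points only.
    """
    n = u.size
    A = np.zeros((n, n))
    for i in range(n):
        A[i, i] = 1.0 / dt + 2.0 * nu / dx**2
        if i > 0:
            A[i, i - 1] = -u[i] / (2.0 * dx) - nu / dx**2
        if i < n - 1:
            A[i, i + 1] = u[i] / (2.0 * dx) - nu / dx**2
    return A, u / dt

def prepare_for_quantum_solver(A, b):
    """Hypothetical helper: pad to 2^k, scale A to unit spectral norm, normalize b."""
    n = A.shape[0]
    size = 1 << max(1, (n - 1).bit_length())   # next power of two (at least 2)
    scale = np.linalg.norm(A, 2)               # largest singular value of A
    A_pad = scale * np.eye(size)               # padding singular values equal scale, so cond(A) is unchanged
    A_pad[:n, :n] = A
    b_pad = np.zeros(size)
    b_pad[:n] = b
    return A_pad / scale, b_pad / np.linalg.norm(b_pad), scale

nx = 6                                          # interior grid points (illustrative)
dx = 1.0 / (nx + 1)
u0 = np.sin(np.pi * np.linspace(dx, 1.0 - dx, nx))
A, b = burgers_implicit_system(u0, dx=dx, dt=0.01, nu=0.05)
A_q, b_q, scale = prepare_for_quantum_solver(A, b)
print(A_q.shape, np.linalg.norm(b_q))
```

Because a quantum linear solver returns a normalized state x̂, the magnitude of the physical update has to be restored classically, for example as c = ‖b‖ / ‖A x̂‖ using the unpadded system.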

Why This Matters More Than a Lab Demo

The collaboration achieved significant circuit-size reductions - fewer qubits, shallower depth, and lower parameter counts - compared to naive VQLS implementations. These improvements directly enable scaling CFD-like problems on near-term noisy quantum devices and GPU-accelerated simulators.

For engineering leaders:

Hybrid quantum-classical solvers can lower the cost per simulation and expand design-space coverage. Instead of running one El Capitan-scale job per week, teams could run families of moderate-fidelity scenarios daily, identifying promising candidates before committing exascale resources to final validation runs.

How BQP's Hybrid CFD Research Attacks the Core Bottlenecks

BQP uses block encoding techniques to represent CFD matrices compactly on quantum circuits. Instead of loading each matrix element individually, a block encoding embeds a scaled copy of the matrix as the top-left block of a larger unitary, built from oracles that return the locations and values of nonzero entries, which quantum algorithms can then query efficiently.
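A minimal sketch of what "block encoding" means, using the textbook unitary dilation for a real symmetric matrix A with spectral norm at most 1: U = [[A, S], [S, −A]] with S = √(I − A²). Applying U to |0⟩|ψ⟩ and keeping the ancilla-|0⟩ component yields A|ψ⟩. This dense construction only illustrates the definition. The sparse-access oracle constructions used in practice, and whatever BQP's pipeline uses, are different and are not shown here; `hermitian_block_encoding` is a hypothetical helper.

```python
import numpy as np

def hermitian_block_encoding(A):
    """Hypothetical helper: unitary U with U[:n, :n] == A for real symmetric A, ||A||_2 <= 1.

    Textbook dilation U = [[A, S], [S, -A]] with S = sqrt(I - A^2); A and S share
    eigenvectors, so they commute and U @ U.T = I. One ancilla qubit doubles the dimension.
    """
    A = np.asarray(A, dtype=float)
    w, V = np.linalg.eigh(A)
    if np.max(np.abs(w)) > 1.0 + 1e-12:
        raise ValueError("scale A so that its spectral norm is at most 1")
    # sqrt(I - A^2) via the eigendecomposition; clip tiny negative round-off to zero.
    S = V @ np.diag(np.sqrt(np.clip(1.0 - w**2, 0.0, None))) @ V.T
    return np.block([[A, S], [S, -A]])

# 1D second-difference matrix on 4 points, scaled to unit spectral norm.
L = 2.0 * np.eye(4) - np.eye(4, k=1) - np.eye(4, k=-1)
A = L / np.linalg.norm(L, 2)
U = hermitian_block_encoding(A)

# Ancilla in |0> (most significant qubit): the input state is [psi, 0].
psi = np.array([1.0, 0.0, 0.0, 0.0])
out = U @ np.concatenate([psi, np.zeros(4)])
print(np.allclose(U @ U.T, np.eye(8)), np.allclose(out[:4], A @ psi))
```

On hardware, A|ψ⟩ is obtained only when the ancilla is measured in |0⟩, which happens with probability ‖A|ψ⟩‖². That is one reason matrix scaling and conditioning matter so much for quantum linear solvers.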

Benefits for CFD:

- Shallower circuits compatible with noisy devices: Reducing gate depth lowers error accumulation on current NISQ hardware.

- More efficient usage of qubits and parameters: Compact representations mean smaller ansatz circuits in VQLS, accelerating classical optimization loops.

- Cleaner mapping from sparse matrices to quantum operators: Preserving the sparsity structure avoids the dense-matrix penalties that plague naive quantum linear algebra approaches.

Stabilizing VQLS with Quantum‑Inspired Optimization

Quantum-Inspired Evolutionary Optimization (QIEO) helps VQLS training avoid barren plateaus (flat loss landscapes where gradient-based optimizers stall), making convergence on CFD benchmarks more reliable.

Early results on the 1D Burgers' equation and 2D lid-driven cavity flows show classical-level accuracy with quantum-ready formulations. BQPhy's QIEO-VQLS solver matched reference solutions to within 1-2% relative error while using fewer circuit parameters and converging 3-5× faster than standard variational approaches.
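A toy sketch of the two ingredients combined in QIEO-VQLS: the normalized global VQLS cost, 1 − |⟨b|A x(θ)⟩|² / (‖A x(θ)‖² ‖b‖²), which is zero exactly when A|x(θ)⟩ is parallel to |b⟩, and a gradient-free evolutionary loop that minimizes it. Assumptions: the 2×2 matrix, the one-parameter RY ansatz, and all helper names are hypothetical; the optimizer is a plain (1+λ) evolution strategy standing in for QIEO, whose internals the source does not describe; and state vectors are computed exactly with NumPy rather than on a simulator or QPU.

```python
import numpy as np

def ry_state(theta):
    """One-qubit ansatz |x(theta)> = RY(theta)|0>, as a real state vector."""
    return np.array([np.cos(theta / 2), np.sin(theta / 2)])

def vqls_global_cost(A, b, x):
    """Normalized global VQLS cost 1 - |<b|A x>|^2 / (<A x|A x> <b|b>); zero iff A x is parallel to b."""
    Ax = A @ x
    return 1.0 - (b @ Ax) ** 2 / ((Ax @ Ax) * (b @ b))

def evolve(cost, n_init=16, n_offspring=8, generations=200, sigma=0.5, seed=0):
    """(1+lambda) evolution strategy over one angle; a generic stand-in, not QIEO."""
    rng = np.random.default_rng(seed)
    # Initial population: evenly spaced angles; the best one becomes the parent.
    population = np.linspace(0.0, 2 * np.pi, n_init, endpoint=False)
    costs = [cost(t) for t in population]
    theta, best_cost = population[int(np.argmin(costs))], min(costs)
    for _ in range(generations):
        children = theta + sigma * rng.standard_normal(n_offspring)
        child_costs = [cost(t) for t in children]
        i = int(np.argmin(child_costs))
        if child_costs[i] < best_cost:      # elitist: keep the parent unless a child is better
            theta, best_cost = children[i], child_costs[i]
        sigma *= 0.95                       # fixed step-size decay schedule
    return theta, best_cost

A = np.array([[2.0, 0.5], [0.5, 1.0]])       # toy 2x2 system (hypothetical)
b = np.array([1.0, 0.0])
theta, c = evolve(lambda t: vqls_global_cost(A, b, ry_state(t)))
x_classical = np.linalg.solve(A, b)
fidelity = abs(ry_state(theta) @ x_classical) / np.linalg.norm(x_classical)
print(f"cost = {c:.2e}, overlap with classical solution = {fidelity:.6f}")
```

Barren plateaus only appear with many qubits and deep circuits, so this toy cannot demonstrate them. It shows the shape of the loop: evaluate the cost for a population of parameters, keep the best, mutate, and repeat, with no gradients required.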

From Rocket Plumes to Real‑World Digital Twins

El Capitan's rocket plume study directly addresses industrial pain points in launch vehicle design, hypersonics, and aero-thermal analysis - areas where turbulent, compressible flows dominate and experimental data is scarce or expensive.

Priority use-cases:

- Multi-engine launch vehicles and exhaust interactions: Understanding acoustic loads, thermal stress on interstage structures, and plume impingement on nearby nozzles or vehicle surfaces.

- Re-entry heating and shock-boundary-layer interactions: Predicting heat flux on thermal protection systems during hypersonic flight, where small modeling errors translate to hardware failures.

- Complex turbomachinery flows and aero-acoustics: Turbine blade aerodynamics, combustor instabilities, and jet noise prediction for commercial and military propulsion systems.

How Exascale and Quantum‑Ready Work Together

BQP complements exascale systems like El Capitan rather than competing with them. Use El Capitan for final validation runs at unprecedented resolution.

Use BQP for rapid design-space exploration, sensitivity analysis, and early-stage optimization, where speed and iteration count matter more than absolute fidelity.

What Engineering Teams Can Do Next

- Run proofs of concept (PoCs) on existing CFD benchmarks using BQPhy-based workflows: start with lid-driven cavity, backward-facing step, or simplified nozzle flows to validate solver accuracy and gauge integration effort.

- Identify linear-solver-heavy workloads as candidates for hybrid experiments: any CFD problem where iterative solvers consume more than 50% of runtime is a strong fit for QIEO-VQLS or quantum-inspired optimization.

- Collaborate with BQP on scoped pilots tied to real design decisions: bring a specific trade study or design iteration challenge, and we'll map it to BQPhy's hybrid solvers with measurable KPIs on speed, cost, and solution quality.
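The 50% rule of thumb follows from Amdahl's law: if the linear solver takes a fraction p of runtime and is made s times faster, the end-to-end speedup is 1 / ((1 − p) + p / s), capped at 1 / (1 − p) no matter how fast the solver becomes. A minimal sketch for checking a workload's profile against that ceiling (the helper name is hypothetical):

```python
def overall_speedup(solver_fraction: float, solver_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only the linear-solver share is accelerated."""
    if not 0.0 <= solver_fraction <= 1.0:
        raise ValueError("solver_fraction must be in [0, 1]")
    if solver_speedup <= 0:
        raise ValueError("solver_speedup must be positive")
    return 1.0 / ((1.0 - solver_fraction) + solver_fraction / solver_speedup)

for p in (0.3, 0.5, 0.8):
    for s in (2, 3, 10):
        print(f"solver share {p:.0%}, solver {s}x faster -> {overall_speedup(p, s):.2f}x overall")
```

At p = 0.5, even an infinitely fast solver (`float("inf")`) yields at most 2× overall, which is why workloads where the solver dominates the profile are the ones worth piloting first.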

El Capitan's record proves exascale CFD is here. BQP's mission is to ensure your team can leverage quantum-ready algorithms today: accelerating design cycles, lowering simulation costs, and building a clear path to quantum-native solvers as hardware matures.

Explore BQP's research on hybrid CFD, read the NVIDIA–Classiq collaboration case study, or reach out to discuss how BQPhy can integrate into your workflow.
